Several years ago, a new memory type began to penetrate the market. Intel had been developing the technology later branded Intel Optane since 2012. Optane technology delivered technical advances on a number of fronts, with the result being a kind of non-volatile memory that was almost as fast as volatile working memory (dynamic random-access memory or DRAM) and also had the ability to retain data when power was turned off. DRAM stays “lit” with information only as long as electricity courses through it. In most computers, DRAM is stationed where, for example, a running program or working data sits.

While Optane can’t quite match DRAM in speed, it has the advantage of persistence. That is, it doesn’t need electricity to retain its state. Persistence is often associated with magnetism. Hard disk drives and tapes use a magnetic field to set a location to either a one or a zero. Most solid state drives on the market today use a technology that changes a voltage state to alter a bit’s numerical value. Optane technology can flip bits one at a time by changing their electrical resistance instead, a more efficient process. This bit-addressability—which, like DRAM architecture, allows random access—gives Optane a speed advantage over even today’s SSDs, which require reading and writing in blocks of data.
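To make the addressing point concrete, here is a minimal sketch of how much data a device must move to change a small value when it can address storage only in fixed-size units. The 4,096-byte block size, the one-byte fine-grained unit, and the function name `bytes_touched` are illustrative assumptions, not published figures for any Optane or NAND product, and the model ignores alignment and controller behavior.

```python
def bytes_touched(update_size: int, granularity: int) -> int:
    """Bytes a device must transfer to change `update_size` bytes
    when it can only address whole units of `granularity` bytes."""
    units = -(-update_size // granularity)  # ceiling division
    return units * granularity


BLOCK_SIZE = 4096  # hypothetical block size for a block-addressed device
FINE_GRAIN = 1     # hypothetical: device can address a single byte

update = 8  # change one 8-byte value

block_cost = bytes_touched(update, BLOCK_SIZE)  # a whole block is moved
fine_cost = bytes_touched(update, FINE_GRAIN)   # only the changed bytes move
amplification = block_cost / fine_cost

print(f"block-addressed: {block_cost} bytes, fine-grained: {fine_cost} bytes")
print(f"amplification factor: {amplification:.0f}x")
```

Under these assumed numbers, the block-addressed device moves hundreds of times more data than the update itself contains, which is the overhead that fine-grained addressing avoids.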

All these tricks don’t come for free, and so Optane is priced between faster, volatile DRAM and the slower, persistent NAND modules used in SSDs. In a certain sense, Optane is a hybrid—not so much technologically, but on a feature basis.

Sits in the system between DRAM and NAND

From a market perspective, this combination of technical characteristics and economics has allowed Optane to insert itself into the memory/storage pool between DRAM and NAND. It fits nicely on a continuum of price and performance, helping smooth data’s pathway to and from increasingly distant portions of the pool. As memory, Optane provides a lower-cost alternative to expensive DRAM, allowing larger memory footprints for the same price. As storage, it enables much faster SSDs, which can act as fast storage caches in front of slower NAND storage. Thus, Optane fills a gap in the storage continuum between high-speed, expensive DRAM and less-expensive, slower NAND.

If the fastest, most expensive memory is right next to the central processor, the slowest, least expensive is far away.

At the very outer ring is good old reliable cheap magnetic tape, designed for huge reams of storage that few people want immediate access to. On the next ring in are traditional magnetic hard drives with their rotating mechanical spindles. They are slow, but big and inexpensive. Good for long-term storage. Getting data from them is relatively easy if one is not in a hurry. Closer still, traditional NAND-based SSDs, faster and more expensive, can begin to participate in near-real-time analytics. These days, SSDs are freed from the communications constraint of the previous storage interface standard, SATA, which, while fast for its time, had become a bottleneck. Today’s SSDs take advantage of the Non-Volatile Memory Express (NVMe) standard, which is faster than any connection in the system except for the processor-memory link.

Then comes the Optane layer, which is really two layers, depending on the form factor.

SSDs based on Optane, using the fast NVMe channel, exceed what NAND-based SSDs can do. With this level of performance, Optane SSDs can greatly accelerate data access via a fast cache or high-speed storage tier. This capability is especially important for an online transaction processing (OLTP) system, in which access to data sets larger than the memory footprint is needed.

Also worth mentioning at this point is Optane drives’ endurance. With 20 times the life of a high-end enterprise class NAND SSD, Optane can perform many more read and write operations without wearing out, making it ideal for fast caching, which involves a constant flood of operations. A side benefit of this endurance is the ability to reduce the size of the caching layer because Optane doesn’t require the degree of over-provisioning necessary with NAND storage.

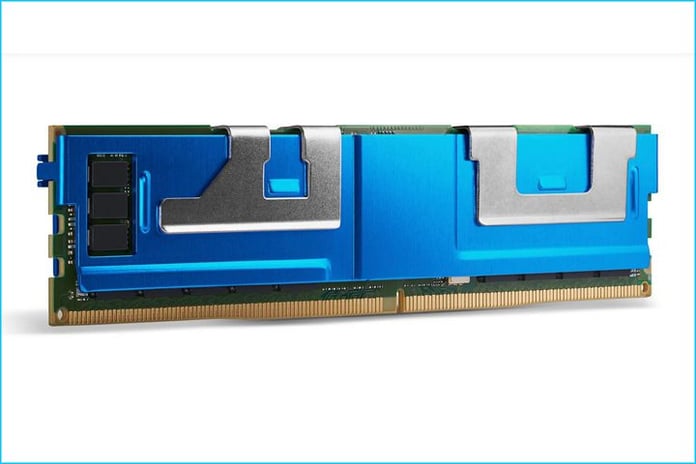

Closer in still, memory modules made with Optane technology can participate in operations even more tightly coupled to the processor. They can do this by way of the memory bus, a dedicated high-speed connection that memory shares with the processor. With this extra scooch of performance, Optane memory can stretch the capacity of DRAM to encompass some of the more challenging data analysis problems, such as large in-memory databases like SAP HANA or Oracle for real-time analytics or artificial intelligence.

An additional advantage of Optane memory is its persistence. One might ask: Why is persistence interesting if it’s only honored in the breach? That is, as long as the electricity doesn’t fail, why do you need it? After all, DRAM doesn’t have persistence, and many real-time analytical programs run just fine in main memory. The answer is: There’s an additional performance benefit with persistent memory, which is that the system doesn’t have to take the time to offload and save back vital data that must be replicated just in case of power loss. This step can be skipped with non-volatile memory, which will keep the data even if the juice cuts out. Although Optane persistent memory products are relatively new to the market, they have already won an innovation award and set a performance record.

Optane = Low latency

The discussion of Optane would not be complete without a reference to latency. A terrific advantage of Optane is its low latency. If speed measures how fast data moves through a channel, latency refers to how long a request has to wait before receiving data, essentially the startup overhead of a data request. With its bit-addressability, Optane can deliver any size data request with scant delay. This capability is particularly important when many small data requests are made.

In NAND-based SSDs, which can address data only in blocks, this type of pattern quickly overwhelms the system’s responsiveness. By contrast, Optane SSDs deliver fast, consistent read latency, even under a heavy write load, a predictability associated with higher quality-of-service levels.
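The distinction between latency and speed can be sketched with a simple model in which the time to serve a request is a fixed startup delay plus the time to move the bytes. The two devices below are hypothetical and share the same bandwidth. Their latency and bandwidth values are assumptions chosen for illustration, not measurements of Optane or NAND drives.

```python
def request_time_us(size_bytes: int, latency_us: float, bandwidth_mb_s: float) -> float:
    """Time to serve one request: fixed startup latency plus transfer time.

    With 1 MB = 10**6 bytes, a bandwidth of 1 MB/s moves 1 byte per
    microsecond, so size_bytes / bandwidth_mb_s is already in microseconds.
    """
    return latency_us + size_bytes / bandwidth_mb_s


# Hypothetical devices: identical bandwidth, different startup latency.
HIGH_LATENCY_US = 100.0
LOW_LATENCY_US = 10.0
BANDWIDTH_MB_S = 2000.0

small = 4096           # one small request
large = 100_000_000    # one large streaming request

small_ratio = (request_time_us(small, HIGH_LATENCY_US, BANDWIDTH_MB_S)
               / request_time_us(small, LOW_LATENCY_US, BANDWIDTH_MB_S))
large_ratio = (request_time_us(large, HIGH_LATENCY_US, BANDWIDTH_MB_S)
               / request_time_us(large, LOW_LATENCY_US, BANDWIDTH_MB_S))

print(f"small request: high-latency device takes {small_ratio:.1f}x as long")
print(f"large request: high-latency device takes {large_ratio:.3f}x as long")
```

In this model the latency gap dominates the small request and all but disappears for the large one, which is why low latency matters most for workloads made up of many small requests.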

Next inward is the DRAM ring. As noted earlier, DRAM is fast, but expensive and volatile. To some degree, its speed is throttled by the memory bus, which, although quite fast, is not the last word: there are several more layers, all of them right on the processor die. These are the cache levels, up to three of them, which store temporary results from processor calculations. Relatively speaking, caches are small, super fast, very expensive, and fixed (their sizes are set in processor design and finalized in manufacturing).

Seen another way, the rings of the memory/storage pool can be represented as a pyramid, which gives a notion of the size of each tier. At the bottom is the largest, slowest, least-expensive-per-byte storage. At each level, quantity decreases while cost and performance rise.

With today’s storage elements, data can be moved up and down through the pyramid, depending on the degree to which it is needed for immediate computation. Intel has created tools to help application software engineers manage data location optimally.
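The idea of data moving up and down the pyramid can be sketched as a toy two-tier store: a small fast tier sits in front of a large slow one, data is promoted when it is accessed, and the least recently used item is demoted when the fast tier fills up. This is an illustrative model only. The class `TwoTierStore` and its methods are hypothetical and do not represent Intel's tools or any real tiering product.

```python
from collections import OrderedDict


class TwoTierStore:
    """Toy model: a small fast tier in front of a large slow tier."""

    def __init__(self, fast_capacity: int):
        self.fast = OrderedDict()  # hot data, ordered from least to most recently used
        self.slow = {}             # cold data; capacity is unlimited in this sketch
        self.fast_capacity = fast_capacity

    def put(self, key, value):
        """Write new data into the fast tier, demoting cold data if needed."""
        self.slow.pop(key, None)
        self.fast[key] = value
        self.fast.move_to_end(key)
        self._demote_if_full()

    def get(self, key):
        """Return (value, tier_that_served_it); promote data found in the slow tier."""
        if key in self.fast:
            self.fast.move_to_end(key)
            return self.fast[key], "fast"
        value = self.slow.pop(key)  # raises KeyError if the key is unknown
        self.fast[key] = value
        self._demote_if_full()
        return value, "slow"

    def _demote_if_full(self):
        # Move the least recently used items down until the fast tier fits.
        while len(self.fast) > self.fast_capacity:
            cold_key, cold_value = self.fast.popitem(last=False)
            self.slow[cold_key] = cold_value


store = TwoTierStore(fast_capacity=2)
for k in ("a", "b", "c"):
    store.put(k, k.upper())

first = store.get("a")   # "a" was demoted earlier, so the slow tier serves it
second = store.get("a")  # now promoted, so the fast tier serves it
print(first, second)
```

Real systems weigh many more factors, such as access frequency, data size, and the cost of each move, but the promote-on-access and demote-when-full pattern is the core of the up-and-down movement described above.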

Optane can deliver a big performance boost when used in front of a large array of magnetic storage. One example of an existing application of this type is SAP HANA. According to Intel executives, customers value Optane’s predictable performance, which consistently delivers a high quality of service per transaction.

In hyperconverged systems, where virtualization, compute, networking and storage subsystems can be configured by software, Optane provides a vital link between faster memory and slower magnetic storage, reducing system bottlenecks and allowing increased virtual machine density.

As mentioned earlier, the applications best able to take advantage of this smooth span of hierarchical memory and storage are in-memory analyses of large databases composed of mixed (structured and unstructured) elements. Today, such implementations are mostly found in the giant cloud service providers and the largest enterprises, which have the scale to obtain the maximum benefit. Some very large enterprise customers can also reap these benefits. At some point, the service providers may be able to provide access to smaller customers as a service.

Most large hardware OEMs are adopting Optane in their converged products. For example, Dell’s highest-end VxRail hyperconverged infrastructure products feature both Optane persistent memory and Optane SSDs.

While it is still early days for Optane in the market, its promise is such that proliferation of Optane-enhanced systems is likely. More enterprises of all sizes will seek to distill instant wisdom from large, disparate sources of real-time data, and those unable to create and manage such hyperconverged systems themselves will likely turn to service providers for the capability.

Roger Kay is affiliated with PUND-IT Inc. and is a longtime independent IT analyst.