The intensive demands of artificial intelligence, machine learning and deep learning applications challenge data center performance, reliability and scalability, especially as architects mimic the design of public clouds to simplify the transition to hybrid cloud and on-premises deployments.

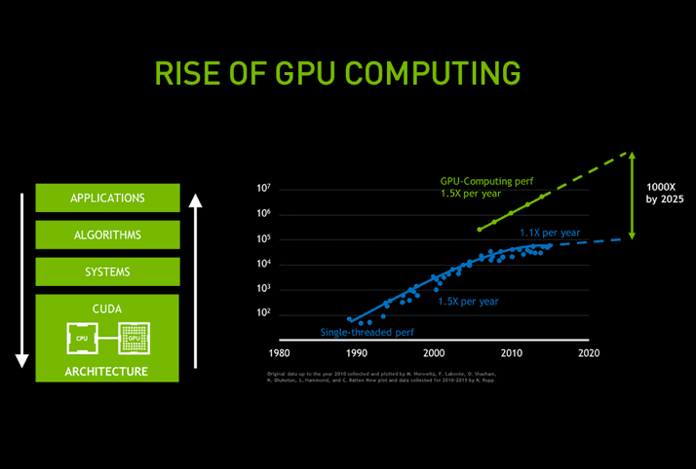

GPU (graphics processing unit) servers are now common, and the ecosystem around GPU computing is evolving rapidly to increase the efficiency and scalability of GPU workloads. Yet there are tricks to maximizing utilization of the costly GPUs while avoiding potential choke points in storage and networking.

In this edition of eWEEK Data Points, Sven Breuner, field CTO, and Kirill Shoikhet, chief architect, at Excelero, offer nine best practices on preparing data centers for AI, ML and DL.

Data Point No. 1: Know your target system performance, ROI and scalability plans.

These plans should dovetail with data center goals. Most organizations start with a small budget and small training data sets, and prepare the infrastructure for seamless and rapid system growth as AI becomes a valuable part of the core business. The chosen hardware and software infrastructure needs to be built for flexible scale-out to avoid disruptive changes with every new growth phase. Close collaboration between data scientists and system administrators is critical for learning the performance requirements and understanding how the infrastructure might need to evolve over time.

Data Point No. 2: Evaluate clustering multiple GPU systems, either now or for the future.

Having multiple GPUs inside a single server enables efficient data sharing and communication within the system and is cost-effective; reference designs already support up to 16 GPUs in a single server and presume future clustered use. A multi-GPU server must be able to read incoming data at a very high rate to keep its GPUs busy, which means it needs an ultra-high-speed network connection all the way through to the storage system that holds the training database. At some point, however, a single server will no longer be able to work through the growing training database in reasonable time, so building a shared storage infrastructure into the design makes it easier to add GPU servers as AI/ML/DL use expands.
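To put rough numbers on "reasonable time," the sketch below estimates how many GPU servers a training run needs. It is a back-of-envelope model built on stated assumptions: throughput scales linearly with server count, shared storage and network keep every GPU fed, and each epoch reads every file once. The function name and workload figures are hypothetical.

```python
import math


def gpu_servers_needed(
    num_files: int,
    epochs: int,
    files_per_sec_per_gpu: float,
    gpus_per_server: int,
    time_budget_hours: float,
) -> int:
    """Estimate how many identical GPU servers finish training within the budget.

    Assumes throughput scales linearly with server count and that the shared
    storage and network keep every GPU fully fed (hypothetical model).
    """
    total_file_reads = num_files * epochs                  # each epoch reads every file once
    server_rate = files_per_sec_per_gpu * gpus_per_server  # files/s for one server
    seconds_available = time_budget_hours * 3600
    return math.ceil(total_file_reads / (server_rate * seconds_available))


# Hypothetical workload: 50 million files, 90 epochs, 2,000 files/s per GPU,
# 8 GPUs per server, and a 24-hour training window.
print(gpu_servers_needed(50_000_000, 90, 2_000, 8, 24))  # 4
```

When the data set or epoch count grows, the result climbs past one. That is the point where a design built around shared storage lets more servers be added without restructuring.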

Data Point No. 3: Assess chokepoints across the AI workflow phases.

The data center infrastructure needs to be able to handle all phases of the AI workflow at the same time. A solid concept for resource scheduling and sharing is critical for cost-effective data centers: while one group of data scientists gets new data that needs to be ingested and prepared, others will train on their available data, and elsewhere, previously generated models will be used in production. Kubernetes has become a popular solution to this problem, making cloud technology easily available on premises and making hybrid deployments feasible.
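As a minimal sketch of how Kubernetes lets the workflow phases share one cluster, the Python below builds Pod manifests as plain dictionaries. It assumes the NVIDIA device plugin is installed so that nodes advertise the `nvidia.com/gpu` resource. The namespaces, image names and the `workflow-phase` label are hypothetical.

```python
import json


def gpu_pod_manifest(name: str, namespace: str, image: str, gpus: int, phase: str) -> dict:
    """Build a Kubernetes Pod manifest (as a plain dict) that requests whole GPUs."""
    if gpus < 0:
        raise ValueError("gpus must be >= 0")
    resources = {}
    if gpus > 0:
        # GPUs are an extended resource: request them via limits, in whole units.
        resources["limits"] = {"nvidia.com/gpu": gpus}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            "namespace": namespace,               # e.g. one namespace per team
            "labels": {"workflow-phase": phase},  # hypothetical label: ingest/train/inference
        },
        "spec": {
            "containers": [{"name": name, "image": image, "resources": resources}],
        },
    }


# Hypothetical workloads from three teams running different phases at the same time.
pods = [
    gpu_pod_manifest("ingest-job", "team-a", "registry.example.com/ingest:1.0", 0, "ingest"),
    gpu_pod_manifest("train-job", "team-b", "registry.example.com/train:1.0", 8, "train"),
    gpu_pod_manifest("serve-model", "team-c", "registry.example.com/serve:1.0", 1, "inference"),
]
for pod in pods:
    print(json.dumps(pod, indent=2))
```

The scheduler places each Pod only on a node with enough free GPUs. As a result, the ingest job, which requests none, never ties up GPUs that the training job needs. Each manifest could be saved to a file and applied with `kubectl apply -f`.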

Data Point No. 4: Review strategies for optimizing GPU utilization and performance.

The computationally intensive nature of many AI/ML/DL applications makes GPU-based servers a common choice. However, while GPUs are efficient at loading data from RAM, training data sets usually far exceed RAM, and the massive number of files involved becomes a bigger challenge to ingest. Achieving the optimal balance between the number of GPUs and the available CPU power, memory and network bandwidth (both between GPU servers and to the storage infrastructure) is critical.
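One way to reason about this balance is to treat the data path as a pipeline whose sustained rate is capped by its slowest stage. The sketch below is a simplified steady-state model that ignores latency, burstiness and caching. The stage names and rates are hypothetical; in practice they would come from measurements.

```python
def feed_analysis(gpus: int, per_gpu_rate: float, upstream: dict) -> tuple:
    """Find the slowest stage in the path that feeds the GPUs.

    upstream maps stage name -> maximum sustainable samples/s (storage, network,
    CPU decode, ...). In a steady-state pipeline, the slowest stage caps throughput.
    Returns (bottleneck_stage, sustained_samples_per_sec, gpu_utilization).
    """
    gpu_demand = gpus * per_gpu_rate
    stages = dict(upstream, gpu_compute=gpu_demand)
    bottleneck = min(stages, key=stages.get)
    sustained = stages[bottleneck]
    return bottleneck, sustained, sustained / gpu_demand


# Hypothetical server: 8 GPUs that could each consume 2,500 images/s.
print(feed_analysis(8, 2_500, {"storage_read": 30_000, "network": 25_000, "cpu_decode": 12_000}))
# ('cpu_decode', 12000, 0.6)
print(feed_analysis(8, 2_500, {"storage_read": 30_000, "network": 25_000, "cpu_decode": 24_000}))
# ('gpu_compute', 20000, 1.0)
```

In the first hypothetical case, CPU decoding starves the GPUs at 60% utilization. Doubling decode capacity moves the bottleneck to the GPUs themselves, which is where it belongs.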

Data Point No. 5: Support the demands of the training and inference phases.

In the classic example of training a system to “see” a cat, the computer plays a numbers game: it (or rather its GPUs) needs to see lots and lots of cats in all colors. Because this access pattern consists of massively parallel file reads, NVMe flash is an ideal fit, providing both ultra-low access latency and a high number of read operations per second. The inference phase poses a similar challenge, because object recognition typically happens in real time, another use case where NVMe flash storage provides a latency advantage.
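The access pattern described here, with many small reads in flight at once, can be sketched in Python using a thread pool. This is an illustrative loader under stated assumptions, not a production data pipeline. The file names and sizes are hypothetical, and random bytes stand in for images.

```python
import os
import tempfile
import time
from concurrent.futures import ThreadPoolExecutor


def read_file(path: str) -> int:
    """Read one file fully and return its size in bytes."""
    with open(path, "rb") as f:
        return len(f.read())


def parallel_read(paths: list, workers: int = 32) -> tuple:
    """Read many small files concurrently; return (total_bytes, files_per_second).

    Blocking file reads release Python's GIL, so the threads keep many reads
    outstanding at once instead of issuing them one after another.
    """
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        total_bytes = sum(pool.map(read_file, paths))
    elapsed = time.perf_counter() - start
    return total_bytes, (len(paths) / elapsed if elapsed > 0 else float("inf"))


def make_sample_files(directory: str, count: int, size: int) -> list:
    """Create stand-in 'images' (random bytes) so the sketch runs anywhere."""
    paths = []
    for i in range(count):
        path = os.path.join(directory, f"cat_{i:05d}.jpg")
        with open(path, "wb") as f:
            f.write(os.urandom(size))
        paths.append(path)
    return paths


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        files = make_sample_files(tmp, count=500, size=16 * 1024)
        total, rate = parallel_read(files)
        # Freshly written files are likely served from the page cache, so this
        # exercises the code path rather than measuring the drive itself.
        print(f"read {total} bytes from {len(files)} files at {rate:,.0f} files/s")
```

Keeping many reads outstanding lets a device with deep command queues, such as an NVMe drive, deliver its full read rate. A loader that reads one file at a time would leave most of that capability idle.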

Data Point No. 6: Consider parallel file systems and alternatives.

Parallel file systems such as IBM Spectrum Scale or BeeGFS can handle the metadata of large numbers of small files efficiently, enabling 3X-4X faster analysis of ML data sets by delivering tens of thousands of small files per second across the network. Because training data is read-only, it is also possible to avoid a parallel file system altogether by making the data volumes directly available to the GPU servers and sharing them in a coordinated way through a framework like Kubernetes.
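For the Kubernetes alternative, a minimal sketch of the relevant objects follows. It shows one PersistentVolumeClaim with the `ReadOnlyMany` access mode, mounted read-only by several training Pods. The sketch assumes the claim binds to a volume that already holds the prepared training set and that the underlying storage driver supports `ReadOnlyMany`. All object names, the size and the storage class are hypothetical.

```python
import json


def read_only_data_claim(claim_name: str, size: str, storage_class: str) -> dict:
    """PVC for a shared, pre-populated training set that many Pods mount read-only."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": claim_name},
        "spec": {
            "accessModes": ["ReadOnlyMany"],  # many nodes may mount it; none may write
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def trainer_pod(pod_name: str, claim_name: str, image: str, mount_path: str = "/data") -> dict:
    """Pod that mounts the shared training-data claim read-only."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": "trainer",
                "image": image,
                "volumeMounts": [
                    {"name": "training-data", "mountPath": mount_path, "readOnly": True}
                ],
            }],
            "volumes": [{
                "name": "training-data",
                "persistentVolumeClaim": {"claimName": claim_name, "readOnly": True},
            }],
        },
    }


# Hypothetical names: one claim, shared by two training Pods on different GPU servers.
claim = read_only_data_claim("training-set-v1", "2Ti", "fast-nvme")
pods = [trainer_pod(f"trainer-{i}", "training-set-v1", "registry.example.com/train:1.0") for i in range(2)]
print(json.dumps([claim, *pods], indent=2))
```

Because no Pod can write to the volume, there is no shared-write state to coordinate. The scheduler only has to decide which GPU servers mount it.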

Data Point No. 7: Choose the right networking backbone.

AI/ML/DL is usually a new workload, and retrofitting it into existing network infrastructure often fails because that infrastructure cannot provide the low latency, high bandwidth, high message rate and smart offloads required for complex computations and fast, efficient data delivery. RDMA-based network transports such as RoCE (RDMA over Converged Ethernet) and InfiniBand have become the standards for meeting these new demands.
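Bandwidth is the easiest of these requirements to estimate. The sketch below converts a per-GPU file rate into the read bandwidth a server needs, and then into the number of links required at a given line rate. The workload figures are hypothetical, and `usable_fraction` is an assumed allowance for protocol overhead and headroom. The arithmetic says nothing about latency or CPU offload, which is where RDMA transports add their value.

```python
import math


def required_gbps(gpus: int, files_per_sec_per_gpu: float, avg_file_kib: float) -> float:
    """Read bandwidth (Gbit/s) one GPU server needs from storage to keep its GPUs fed."""
    bytes_per_sec = gpus * files_per_sec_per_gpu * avg_file_kib * 1024
    return bytes_per_sec * 8 / 1e9


def links_needed(demand_gbps: float, link_gbps: float, usable_fraction: float = 0.9) -> int:
    """Links of a given line rate needed, assuming only part of it carries payload.

    usable_fraction is a placeholder for protocol overhead and headroom, not a measured value.
    """
    return math.ceil(demand_gbps / (link_gbps * usable_fraction))


# Hypothetical server: 8 GPUs, each reading 3,000 files/s of 150 KiB on average.
demand = required_gbps(8, 3_000, 150)
print(f"{demand:.1f} Gbit/s")  # 29.5 Gbit/s
for speed in (25, 100, 200):
    print(speed, "Gbit/s links:", links_needed(demand, speed))
```

With these hypothetical numbers, a single 25 Gbit/s link falls short, while one 100 Gbit/s link leaves headroom for growth.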

Data Point No. 8: Consider four storage system levers for price/performance.

1) High read throughput combined with low latency, delivered in a way that doesn't constrain hybrid deployments and can run on either cloud or on-premises resources.

2) Data protection. The AI/ML/DL storage system is typically significantly faster than other storage systems in the data center, so recovering it from backup after a complete failure might take a very long time and disrupt ongoing operations. The read-mostly nature of DL training makes it a good fit for distributed erasure coding, where the highest level of fault tolerance is built into the primary storage system itself, with only a very small difference between raw and usable capacity.

3) Capacity elasticity to accommodate any size or type of drive, so that as flash media evolve and flash drive characteristics expand, data centers can maximize price/performance at scale, when it matters the most.

4) Performance elasticity. Since AI data sets need to grow over time to further improve the accuracy of models, storage infrastructure should achieve a close-to-linear scaling factor, where each incremental storage addition brings an equivalent incremental gain in performance. This allows organizations to start small and grow non-disruptively as business dictates.
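Two of these levers lend themselves to quick arithmetic: the raw-versus-usable capacity cost of data protection, and how close performance scaling comes to linear. The sketch below assumes an MDS erasure code such as Reed-Solomon, where k data shards plus m parity shards survive any m failures. The 8+2 layout and the benchmark figures are hypothetical.

```python
def usable_fraction_erasure(data_shards: int, parity_shards: int) -> float:
    """Usable/raw capacity for k+m erasure coding, which survives m shard failures."""
    return data_shards / (data_shards + parity_shards)


def usable_fraction_replication(copies: int) -> float:
    """Usable/raw capacity for n-way replication, which survives n-1 copy failures."""
    return 1 / copies


def scaling_efficiency(single_node_rate: float, nodes: int, measured_rate: float) -> float:
    """1.0 means perfectly linear scaling: n nodes deliver n times one node's rate."""
    return measured_rate / (single_node_rate * nodes)


# Both layouts below tolerate two simultaneous failures (hypothetical 8+2 layout).
print(f"8+2 erasure coding: {usable_fraction_erasure(8, 2):.0%} usable")   # 80% usable
print(f"3-way replication:  {usable_fraction_replication(3):.0%} usable")  # 33% usable

# Hypothetical benchmark: one storage node sustains 10 GB/s; six nodes measured at 57 GB/s.
print(f"scaling efficiency: {scaling_efficiency(10, 6, 57):.2f}")  # 0.95
```

For the same two-failure tolerance, erasure coding keeps far more of the raw flash usable than replication does. Tracking scaling efficiency after each expansion shows whether the "close-to-linear" goal is actually being met.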

Data Point No. 9: Set benchmarks and performance metrics to aid in scalability.

For example, for deep learning storage, one metric might be that each GPU processes X files per second (typically thousands or tens of thousands), where each file has an average size of Y KB (from only a few dozen to thousands of KB). Establishing appropriate metrics upfront helps to qualify architectural approaches and solutions from the outset, and to guide follow-on expansions.
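A metric like this can be captured directly as an acceptance check for candidate architectures. The sketch below uses X and Y as defined above. The target values, cluster size and measured result are hypothetical placeholders for real benchmark data.

```python
from dataclasses import dataclass


@dataclass
class StorageTarget:
    """Per-GPU deep learning storage metric: X files/s at an average size of Y KiB."""
    files_per_sec_per_gpu: float  # X in the text
    avg_file_kib: float           # Y in the text

    def required_files_per_sec(self, gpus: int) -> float:
        return gpus * self.files_per_sec_per_gpu

    def required_mib_per_sec(self, gpus: int) -> float:
        return self.required_files_per_sec(gpus) * self.avg_file_kib / 1024


def qualify(target: StorageTarget, gpus: int, measured_files_per_sec: float) -> tuple:
    """Return (passes, fraction_of_target) for a measured benchmark result."""
    fraction = measured_files_per_sec / target.required_files_per_sec(gpus)
    return fraction >= 1.0, fraction


# Hypothetical target: X = 5,000 files/s per GPU, Y = 100 KiB, for a 16-GPU cluster.
target = StorageTarget(files_per_sec_per_gpu=5_000, avg_file_kib=100)
print(target.required_files_per_sec(16), target.required_mib_per_sec(16))  # 80000 7812.5
print(qualify(target, 16, measured_files_per_sec=72_000))                 # (False, 0.9)
```

Re-running the same check with a larger GPU count before each expansion shows whether the existing storage still meets the target or needs to grow alongside the compute.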

If you have a suggestion for an eWEEK Data Points article, email cpreimesberger@eweek.com.