Large language models (LLMs) are advanced artificial intelligence models built with deep learning, specifically on a neural network architecture known as the transformer. LLMs use transformers to perform natural language processing (NLP) tasks such as language translation, text classification, sentiment analysis, text generation, and question answering.

LLMs are trained on massive datasets drawn from a wide array of sources. They are also characterized by their immense size: some of the most successful LLMs have hundreds of billions of parameters.

Why Are Large Language Models Important?

Advances in artificial intelligence and generative AI are pushing the boundaries of what was once considered far-fetched in computing. LLMs, some of which have hundreds of billions of parameters, are used to tackle the challenge of interacting with machines in a human-like manner.

LLMs help businesses with problem-solving and communication-related tasks. Because they generate human-like text, they are valuable for tasks such as text summarization, language translation, content generation, and sentiment analysis.

Large language models bridge the gap between human communication and machine understanding. Beyond the tech industry, LLMs are applied in fields like healthcare and science, where they are used for tasks such as predicting gene expression and designing proteins. DNA language models (also called genomic or nucleotide language models) can identify statistical patterns in DNA sequences. LLMs also power customer service and support functions such as AI chatbots and conversational AI.

How Do Large Language Models Work?

For an LLM to perform accurately and efficiently, it is first trained on a large volume of data, often referred to as a corpus. The corpus can include both unstructured and structured data, and the text is split into tokens (words or word fragments) before it is processed by the transformer neural network.

After pre-training on a large corpus of text, the model can be fine-tuned on specific tasks by training it on a smaller dataset related to that task. Pre-training is primarily self-supervised (sometimes described as unsupervised or semi-supervised): the model learns to predict missing or next tokens from the surrounding text, so the raw text supplies its own labels.
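To make the self-supervised idea concrete, here is a minimal sketch of how training examples can be derived from raw text with no human labels: each token's target is simply the token that follows it. It assumes a toy whitespace tokenizer and uses a count-based bigram model as a stand-in for the neural network. Real LLMs use subword tokenizers and transformers, and every helper name here is hypothetical.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Toy whitespace tokenizer (real LLMs use subword tokenizers)."""
    return text.lower().split()


def next_token_pairs(tokens: list[str], context_size: int = 3) -> list[tuple[tuple[str, ...], str]]:
    """Build (context, target) pairs: the label is just the next token in the text."""
    pairs = []
    for i in range(1, len(tokens)):
        context = tuple(tokens[max(0, i - context_size):i])
        pairs.append((context, tokens[i]))
    return pairs


def train_bigram(tokens: list[str]) -> dict[str, Counter]:
    """Count-based stand-in for a neural network: estimates P(next | previous token)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def avg_cross_entropy(counts: dict[str, Counter], tokens: list[str]) -> float:
    """Average negative log-likelihood of each next token (the pre-training loss)."""
    losses = []
    for prev, nxt in zip(tokens, tokens[1:]):
        total = sum(counts[prev].values())
        losses.append(-math.log(counts[prev][nxt] / total))
    return sum(losses) / len(losses)


tokens = tokenize("the cat sat on the mat the cat ate")
pairs = next_token_pairs(tokens)
print(pairs[:3])
model = train_bigram(tokens)
# Evaluated on the same toy text it was "trained" on, purely for illustration.
print(round(avg_cross_entropy(model, tokens), 3))
```

Fine-tuning differs mainly in the data: instead of raw text, the model sees curated input/output examples for the target task.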

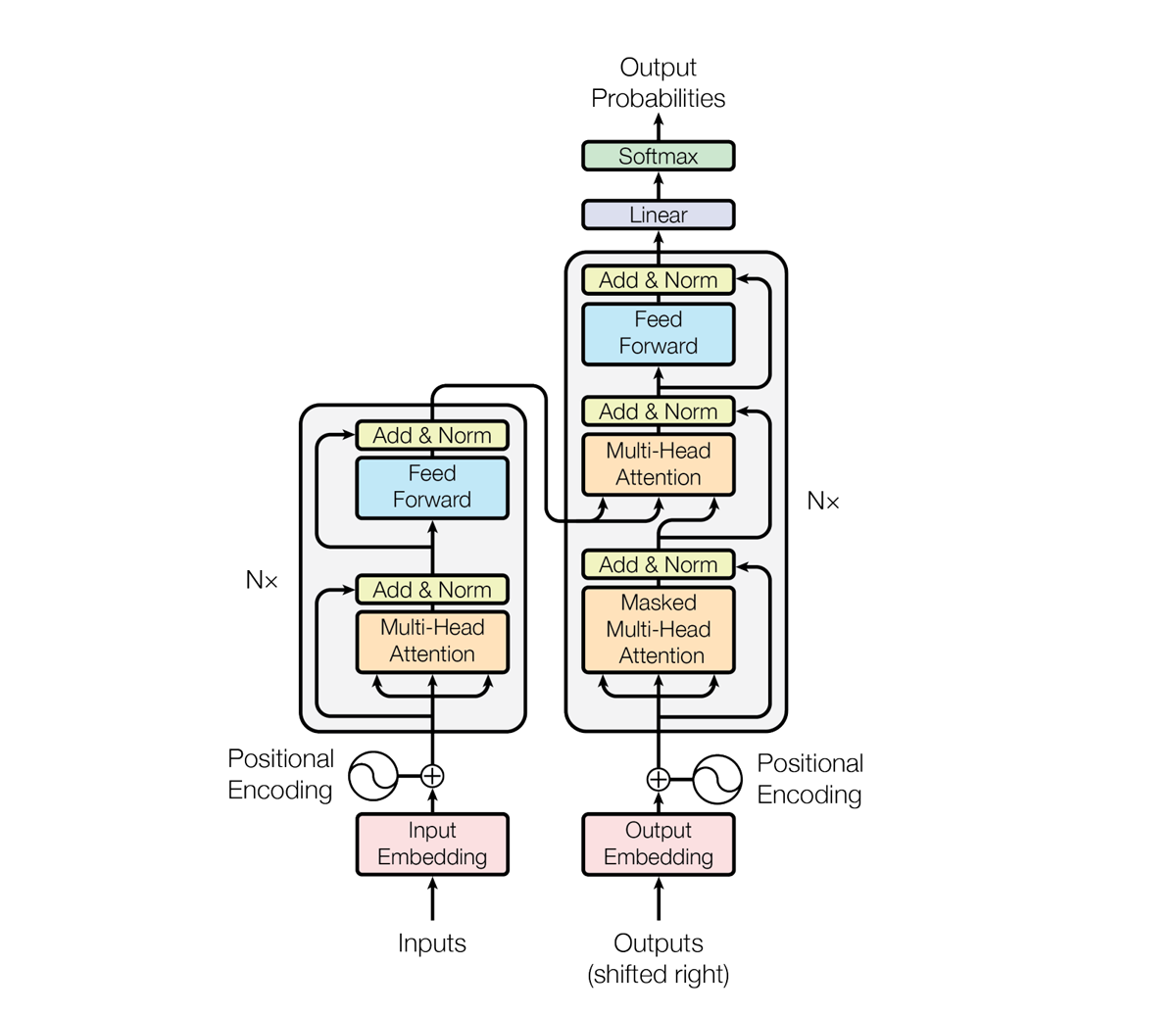

Large language models are built on deep learning architectures called transformer neural networks, which learn context and meaning by tracking relationships between the elements of sequential data, such as the words in a sentence. The Transformer was introduced in the 2017 paper "Attention Is All You Need" by Ashish Vaswani, Noam Shazeer, Niki Parmar, and five other authors. The original Transformer uses an encoder-decoder structure: the encoder processes the input, and the decoder uses that representation to produce an output prediction. (Many LLMs, including GPT models, use only the decoder stack.) The following graphics are from their paper:

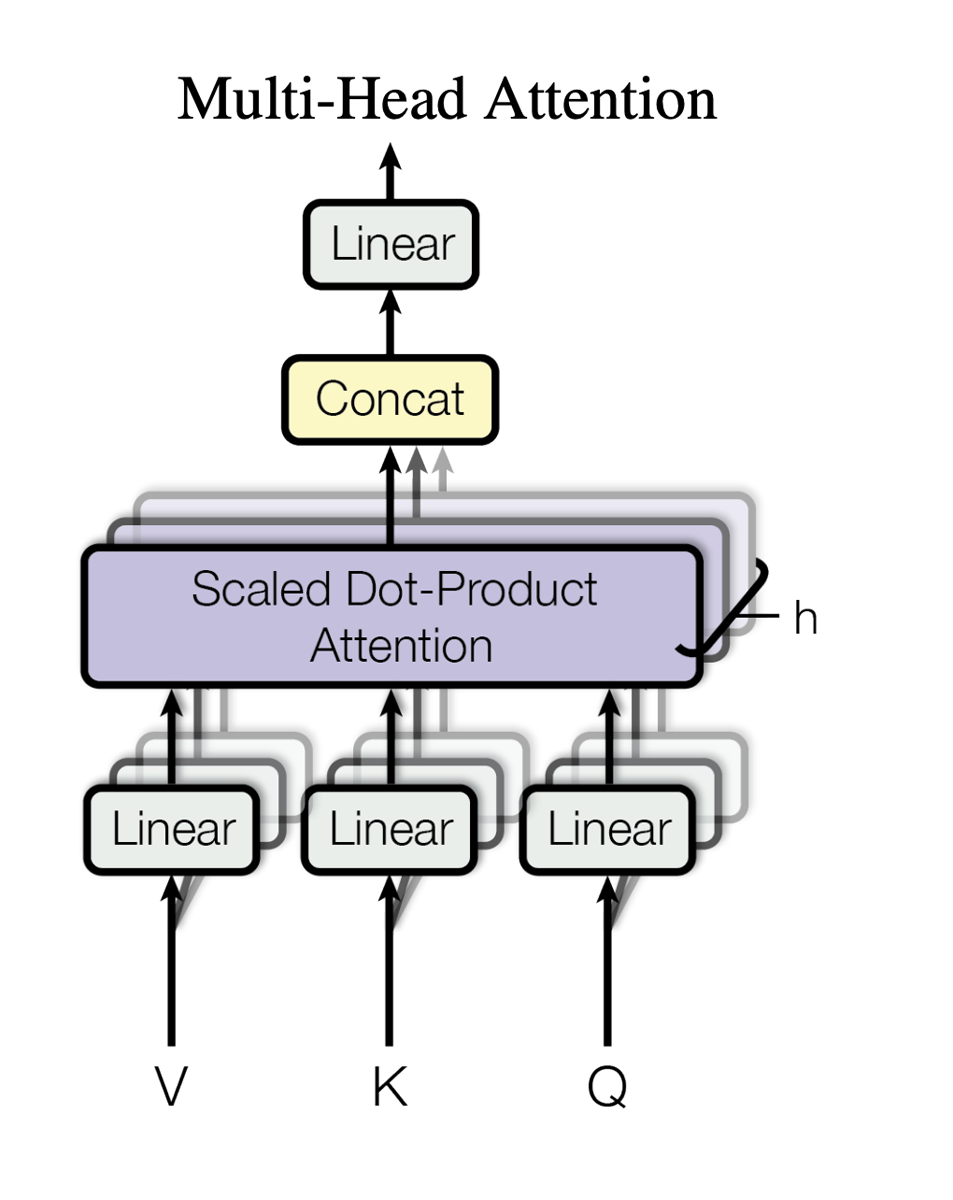
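For reference, the paper defines its core operation, scaled dot-product attention, over query ($Q$), key ($K$), and value ($V$) matrices, where $d_k$ is the key dimension. Multi-head attention runs $h$ of these operations in parallel on learned projections and concatenates the results:

```latex
\begin{aligned}
\mathrm{Attention}(Q, K, V) &= \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right) V \\
\mathrm{MultiHead}(Q, K, V) &= \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^{O},
\quad \mathrm{head}_i = \mathrm{Attention}\!\left(Q W_i^{Q},\, K W_i^{K},\, V W_i^{V}\right)
\end{aligned}
```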

Multi-head self-attention is another key component of the Transformer architecture, and it allows the model to weigh the importance of different tokens in the input when making predictions for a particular token. The “multi-head” aspect allows the model to learn different relationships between tokens at different positions and levels of abstraction.
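The multi-head mechanism can be sketched in plain Python. In this illustrative, unoptimized version, every head projects the same token embeddings into its own query, key, and value spaces, computes softmax-weighted attention over all tokens, and the head outputs are concatenated and mixed by an output projection. The projection weights are random placeholders (a trained model learns them), and all function names are hypothetical.

```python
import math
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: list[float]) -> list[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> tuple[Matrix, Matrix]:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]


def multi_head_self_attention(x: Matrix, num_heads: int, rng: random.Random) -> Matrix:
    d_model = len(x[0])
    assert d_model % num_heads == 0, "d_model must divide evenly across heads"
    d_head = d_model // num_heads
    heads = []
    for _ in range(num_heads):
        # Each head gets its own projections (random here, learned in a real model).
        w_q, w_k, w_v = (random_matrix(d_model, d_head, rng) for _ in range(3))
        out, _ = attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
        heads.append(out)
    # Concatenate the heads' outputs for each token, then apply the output projection.
    concat = [[value for head in heads for value in head[i]] for i in range(len(x))]
    w_o = random_matrix(d_model, d_model, rng)
    return matmul(concat, w_o)


rng = random.Random(0)
x = random_matrix(4, 8, rng)  # 4 tokens, each an 8-dimensional embedding
y = multi_head_self_attention(x, num_heads=2, rng=rng)
print(len(y), len(y[0]))
```

The output keeps one vector per token with the same width as the input, which is what lets transformer layers be stacked.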

4 Large Language Model Types

The common types of LLMs are Language Representation, Zero-Shot, Multimodal, and Fine-Tuned. The details of each type are as follows:

Language Representation Model

Many NLP applications are built on language representation models (LRMs) designed to understand and generate human language. Examples of such models include GPT (Generative Pre-trained Transformer) models, BERT (Bidirectional Encoder Representations from Transformers), and RoBERTa. These models are pre-trained on massive text corpora and can be fine-tuned for specific tasks like text classification and language generation.

Zero-Shot Model

Zero-shot models are known for their ability to perform tasks without task-specific training data. These models can generalize and make predictions or generate text for tasks they have never seen before. GPT-3 is an example of a zero-shot model: given only a natural-language instruction, it can answer questions, translate languages, and perform various other tasks without task-specific fine-tuning.

Multimodal Model

LLMs were initially designed for text content. Multimodal models, however, work with more than one type of data, such as text and images, and are designed to understand (and in some cases generate) content across these modalities. For instance, OpenAI's CLIP is a multimodal model that can associate text with images and vice versa, making it useful for tasks like text-based image retrieval and zero-shot image classification.
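CLIP-style models map text and images into a shared embedding space, so matching text to images becomes a similarity search. The sketch below assumes the embeddings have already been produced by the model's text and image encoders. The vectors are made-up toy values, not real CLIP outputs, and `retrieve` is a hypothetical helper.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: how closely two embedding vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical image embeddings (a real system would get these from an image encoder).
image_embeddings = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.9, 0.2],
    "city.jpg": [0.0, 0.2, 0.9],
}


def retrieve(text_embedding: list[float], images: dict[str, list[float]], top_k: int = 1) -> list[str]:
    """Rank images by similarity to the text embedding and return the best matches."""
    ranked = sorted(images, key=lambda name: cosine(text_embedding, images[name]), reverse=True)
    return ranked[:top_k]


query = [0.8, 0.2, 0.1]  # pretend embedding of the caption "a sunny beach"
print(retrieve(query, image_embeddings))
```

The "vice versa" direction works the same way: embed one image and rank a set of candidate captions by cosine similarity.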

Fine-Tuned Or Domain-Specific Models

While pre-trained language representation models are versatile, they may not always perform optimally for specific tasks or domains. Fine-tuned models have undergone additional training on domain-specific data to improve their performance in particular areas. For example, a GPT-3 model could be fine-tuned on medical data to create a domain-specific medical chatbot or assist in medical diagnosis.
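Domain-specific fine-tuning usually starts with a dataset of example inputs and desired outputs. The sketch below serializes a couple of invented medical Q&A pairs as JSON Lines in the chat-style "messages" layout that OpenAI's fine-tuning guide describes. The examples, system prompt, and helper names are hypothetical, and the exact format varies by fine-tuning service or library, so check the current documentation of whichever one you use.

```python
import json

# Hypothetical system prompt and examples; a real dataset would hold many reviewed examples.
SYSTEM_PROMPT = "You are a careful assistant that answers general medical questions."
examples = [
    ("What is a normal resting heart rate for adults?",
     "For most adults, a normal resting heart rate is 60 to 100 beats per minute."),
    ("What does BMI stand for?",
     "BMI stands for body mass index."),
]


def to_chat_record(question: str, answer: str) -> dict:
    """Wrap one Q&A pair as a system/user/assistant conversation."""
    return {"messages": [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]}


def to_jsonl(records: list[dict]) -> str:
    """Serialize one JSON object per line (JSON Lines)."""
    return "".join(json.dumps(record) + "\n" for record in records)


records = [to_chat_record(q, a) for q, a in examples]
jsonl_text = to_jsonl(records)
# In practice you would save this text to a .jsonl file and upload it for training.
print(jsonl_text.splitlines()[0][:80])
```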

Large Language Model Examples

You have likely heard of GPT thanks to the buzz around ChatGPT, the generative AI chatbot OpenAI launched in 2022. Beyond GPT, there are other noteworthy large language models.

- Pathways Language Model (PaLM): PaLM is a 540-billion-parameter transformer-based LLM developed by Google AI. As of this writing, its successor, PaLM 2, powers the latest version of Google Bard.

- XLNet: XLNet is an autoregressive Transformer that combines BERT-like bidirectional context with the autoregressive modeling approach of Transformer-XL to improve language modeling. It was developed by Google Brain and Carnegie Mellon University researchers in 2019 and can perform NLP tasks like sentiment analysis and language modeling.

- BERT: Bidirectional Encoder Representations from Transformers is a deep learning technique for NLP developed by Google. BERT can be used to filter spam emails and improve the accuracy of the Smart Reply feature.

- Generative pre-trained transformers (GPT): Developed by OpenAI, GPT is one of the best-known large language models. It has undergone different iterations, including GPT-3 and GPT-4. The model can generate text, translate languages and answer your questions in an informative way.

- LLaMA: Large Language Model Meta AI was publicly released in February 2023 in four model sizes: 7, 13, 33, and 65 billion parameters. Meta AI released LLaMA 2 in July 2023 in three sizes: 7B, 13B, and 70B parameters.
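Parameter counts translate directly into hardware requirements. As a rough worked example, the weights alone take (number of parameters) × (bytes per parameter). The sketch below applies that to the model sizes above, assuming 16-bit (2-byte) weights, plus a 4-bit quantized case for comparison. It uses decimal gigabytes and ignores activations, caches, and framework overhead, so real memory needs are higher.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params_billion: float, precision: str = "fp16") -> float:
    """Weights-only memory in decimal GB: (billions of params * 1e9 * bytes) / 1e9."""
    return num_params_billion * BYTES_PER_PARAM[precision]


for name, size in [("LLaMA 2 7B", 7), ("LLaMA 2 13B", 13), ("LLaMA 2 70B", 70), ("PaLM 540B", 540)]:
    fp16 = weight_memory_gb(size)
    int4 = weight_memory_gb(size, "int4")
    print(f"{name}: ~{fp16:.0f} GB at fp16, ~{int4:.1f} GB at int4")
```

This arithmetic is why a 7B model can fit on a single consumer GPU while a 540B model needs a cluster.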

7 Large Language Model Use Cases

While LLMs are still evolving, they can already assist users with many tasks across fields including education, healthcare, customer service, and entertainment. Common uses of LLMs include:

- Language translation: LLMs can generate natural-sounding translations across multiple languages, enabling businesses to communicate with partners and customers in different languages.

- Code and text generation: Language models can generate code snippets, write product descriptions, create marketing content, or even draft emails.

- Question answering: Companies can use LLMs in customer support chatbots and virtual assistants to provide instant responses to user queries without human intervention.

- Education and training: The technology can generate personalized quizzes, provide explanations, and give feedback based on the learner’s responses.

- Customer service: LLMs are one of the underlying technologies behind the AI-powered chatbots that companies use to automate customer service.

- Legal research and analysis: Language models can assist legal professionals in researching and analyzing case law, statutes, and legal documents.

- Scientific research and discovery: LLMs contribute to scientific research by helping scientists and researchers analyze and process large volumes of scientific literature and data.

4 Advantages of Large Language Models

LLMs offer an enormous potential productivity boost, making them especially valuable for organizations that generate large volumes of data. Below are some of the benefits LLMs deliver to companies that leverage their capabilities.

Increased Efficiency

LLMs' ability to understand human language makes them suitable for completing repetitive or laborious tasks. For example, LLMs can generate human-like text much faster than humans, which is advantageous for tasks like content creation, writing code, or summarizing large amounts of information.

Enhanced Question-Answering Capabilities

LLMs can also be described as answer-generation machines. They are so good at generating fluent, relevant responses to user queries that experts have had to weigh in to reassure users that generative AI will not replace the Google search engine.

Few-Shot or Zero-Shot Learning

LLMs can perform tasks with only a few examples (few-shot) or with none at all (zero-shot), without task-specific training. They can generalize from patterns learned during pre-training to make predictions in new domains.
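In practice, the difference between zero-shot and few-shot use often comes down to how the prompt is written. A zero-shot prompt gives only an instruction, while a few-shot prompt prepends a handful of solved examples for the model to imitate. The hypothetical `build_prompt` helper below only assembles the prompt text; sending it to a model is out of scope, and the reviews are invented.

```python
def build_prompt(instruction: str, query: str, examples: list[tuple[str, str]] = ()) -> str:
    """Assemble a prompt; with no examples it is zero-shot, with examples it is few-shot."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}\nSentiment: {label}\n")
    # The model is expected to continue after the final "Sentiment:".
    lines.append(f"Review: {query}\nSentiment:")
    return "\n".join(lines)


instruction = "Classify the sentiment of each review as Positive or Negative."
query = "The battery died after a day."

zero_shot = build_prompt(instruction, query)
few_shot = build_prompt(
    instruction,
    query,
    examples=[("I love this phone.", "Positive"), ("The screen cracked in a week.", "Negative")],
)
print(few_shot)
```

No model weights change in either case; the examples live only in the prompt, which is what distinguishes few-shot prompting from fine-tuning.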

Transfer Learning

LLMs serve professionals across many industries because they support transfer learning: a model trained on one task can be fine-tuned and repurposed for different tasks with minimal additional training.
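Transfer learning typically keeps most of the pre-trained weights fixed and trains a small task-specific component on top. The sketch below mimics that pattern. `frozen_encoder` is a hypothetical stand-in for a pre-trained model's feature extractor (here just hand-written keyword counts), and only a logistic-regression "head" is trained, using gradient descent on a few invented examples.

```python
import math


def frozen_encoder(text: str) -> list[float]:
    """Hypothetical stand-in for frozen pre-trained features; never updated during training."""
    words = text.lower().split()
    positive = sum(w in {"great", "love", "excellent"} for w in words)
    negative = sum(w in {"bad", "broken", "terrible"} for w in words)
    return [float(positive), float(negative), 1.0]  # last feature acts as a bias term


def predict(weights: list[float], features: list[float]) -> float:
    z = sum(w * f for w, f in zip(weights, features))
    return 1 / (1 + math.exp(-z))  # sigmoid -> probability of the positive class


def train_head(data: list[tuple[str, int]], epochs: int = 200, lr: float = 0.5) -> list[float]:
    """Train only the small head; the encoder's features are computed once and reused."""
    features = [(frozen_encoder(text), label) for text, label in data]
    weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, y in features:
            error = predict(weights, x) - y  # gradient of log loss w.r.t. the logit
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
    return weights


train = [
    ("great product love it", 1),
    ("excellent service", 1),
    ("bad and broken", 0),
    ("terrible experience", 0),
]
head = train_head(train)
print(predict(head, frozen_encoder("love the excellent design")) > 0.5)
```

Because the encoder stays fixed, adapting to a new task only means training a new head, which is far cheaper than retraining the whole model.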

Challenges of Large Language Models

While LLMs offer many benefits, they also have some noteworthy drawbacks that may affect the quality of results.

Performance Depends On Training Data

The performance and accuracy of LLMs depend on the quality and representativeness of their training data. LLMs are only as good as the data they learn from, so models trained on biased or low-quality data are likely to produce questionable results. This is a serious problem that can cause significant damage, especially in sensitive disciplines where accuracy is critical, such as legal, medical, or financial applications.
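Because output quality tracks data quality, training corpora are usually cleaned before use. The sketch below shows two simple heuristics, exact deduplication after normalization and a minimum-length filter. The threshold and sample documents are hypothetical. Production pipelines go much further, with near-duplicate detection, language identification, and toxicity filtering, for example.

```python
import hashlib


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return " ".join(text.lower().split())


def clean_corpus(docs: list[str], min_words: int = 5) -> list[str]:
    seen: set[str] = set()
    kept = []
    for doc in docs:
        norm = normalize(doc)
        if len(norm.split()) < min_words:
            continue  # drop very short fragments such as navigation text
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        kept.append(doc)
    return kept


docs = [
    "Large language models learn patterns from text.",
    "large language models   learn patterns from text.",
    "Click here!",
    "Transformers use attention to relate tokens in a sequence.",
]
print(clean_corpus(docs))
```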

Lack Of Common Sense Reasoning

Despite their impressive language capabilities, large language models often struggle with common sense reasoning. For humans, common sense comes naturally. For LLMs, however, common sense is not so common: they can produce responses that are factually incorrect or lack context, leading to misleading or nonsensical outputs.

Ethical Concerns

The use of LLMs raises ethical concerns regarding potential misuse or malicious applications. There is a risk of generating harmful or offensive content, deepfakes, or impersonations that can be used for fraud or manipulation.

Common Large Language Model Tools

While there is a wide variety of LLM tools, and more launch constantly, the following are among the most common you'll encounter in the generative AI landscape:

- OpenAI API: OpenAI provides an API that lets developers access its LLMs. Users can send requests to the API to generate text, answer questions, and perform language translation tasks.

- Hugging Face Transformers: The Hugging Face Transformers library is an open source library that provides pre-trained models for NLP tasks. It supports models like GPT-2, BERT, RoBERTa, and many others.

- PyTorch: LLMs can be fine-tuned using deep learning frameworks like PyTorch. For example, OpenAI's openly released GPT-2 model can be fine-tuned using PyTorch.

- spaCy: spaCy is a library for advanced natural language processing in Python. While it does not directly handle LLMs, it is commonly used for various NLP tasks such as linguistically motivated tokenization, part-of-speech tagging, named entity recognition, dependency parsing, sentence segmentation, text classification, lemmatization, morphological analysis, and entity linking.
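For a sense of what calling a hosted LLM looks like, the sketch below builds (but does not send) an HTTP request for OpenAI's Chat Completions endpoint using only the Python standard library. The URL and the `model`/`messages` body follow OpenAI's public API reference at the time of writing. However, the model name is a placeholder assumption and the API evolves, so check the current documentation. Most developers use OpenAI's official client library instead of raw HTTP.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Build a POST request with a single user message; the model name is a placeholder."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The key is read from the environment; an empty value will be rejected by the API.
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
        },
        method="POST",
    )


req = build_chat_request("Translate 'good morning' into French.")
print(req.full_url, req.get_method())

# To actually send it (requires a valid API key and network access):
# with urllib.request.urlopen(req) as resp:
#     reply = json.load(resp)
#     print(reply["choices"][0]["message"]["content"])
```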

Large Language Models In The Future

As LLMs mature, they are expected to improve on many fronts. Future models may generate more coherent responses and come with better methods for bias detection and mitigation, as well as greater transparency, making them more trusted and reliable resources for users in industries like finance, content creation, healthcare, and education.

In addition, there will be a far greater number and variety of LLMs, giving companies more options as they select the best LLM for their particular artificial intelligence deployment. Similarly, customizing LLMs will become easier and more precise, allowing each piece of AI software to be fine-tuned for greater speed, efficiency, and productivity.

It’s also likely (though not yet known) that large language models will be considerably less expensive, allowing smaller companies and even individuals to leverage the power and potential of LLMs.

Bottom Line: Large Language Models

Large language models represent a transformative leap in artificial intelligence and have revolutionized industries by automating language-related processes.

The versatility and human-like text-generation abilities of large language models are reshaping how we interact with technology, from chatbots and content generation to translation and summarization. However, the deployment of large language models also comes with ethical concerns, such as biases in their training data, potential misuse, and the privacy considerations of their training. Balancing their potential with responsible and sustainable development is essential to harness the benefits of large language models.
