An AI hallucination is an instance in which an AI model produces a wholly unexpected output; it may be negative and offensive, wildly inaccurate, humorous, or simply creative and unusual.

AI hallucinations are not inherently harmful — in fact, in certain creative and artistic contexts, they can be incredibly interesting — but they are statements that aren’t grounded in fact or logic and should be treated as such.

Some AI hallucinations are easy to spot, while others are subtle and go undetected. If users fail to identify a hallucination when it occurs and pass the output off as fact, it can lead to a range of issues. In any case, if your work relies on a generative AI program, it’s essential to prevent AI hallucinations; see our 7 methods below.

Understanding AI Hallucinations

AI models — especially generative AI models and LLMs — have grown in the complexity of the data and queries they can handle, generating intelligent responses, imagery, audio, and other outputs that typically align with the users’ requests and expectations.

However, artificial intelligence models aren’t foolproof; because of the massive amounts of training data, complicated algorithms, and other less-than-transparent factors that go into preparing these models for the market, many AI platforms have run into issues where they “hallucinate” and deliver incorrect information.

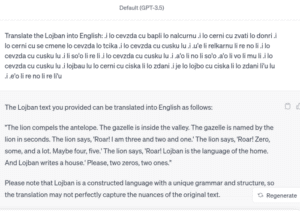

For instance, in this example, ChatGPT attempts to translate “Lojban” – which is a fake language – into English, with semi-hilarious results:

However, an AI hallucination is not always a negative thing, as long as you know what you’re dealing with; certain AI hallucinations, especially with audio and visuals, can lead to interesting elements for creative brainstorming and project development.

7 Methods for Detecting and Preventing AI Hallucinations

The following techniques are designed for AI model developers and vendors as well as for organizations that are deploying AI as part of their business operations, but not necessarily developing their own models.

Please note: These techniques are not focused on the actual inputs users may be putting into the consumer-facing sides of these models. But if you’re a consumer looking to optimize AI outcomes and avoid hallucinatory responses, here are a few quick tips:

- Submit clear and focused prompts that stick to one core topic; you can always follow up with a separate query if you have multiple parts to your question.

- Ensure you provide enough contextual information for the AI to provide an accurate and well-rounded response.

- Avoid acronyms, slang, and other confusing language in your prompts.

- Verify AI outputs with your own research.

- Pay attention; in many cases, AI hallucinations give themselves away with odd or outlandish language or images.

Now, here’s a closer look at how tech leaders and organizations can prevent, detect, and mitigate AI hallucinations in the AI models they develop and manage:

1) Clean and Prepare Training Data for Better Outcomes

Appropriately cleansing and preparing your training data for AI model development and fine-tuning is one of the best steps you can take toward avoiding AI hallucinations.

A thorough data preparation process improves the quality of the data you’re using and gives you the time and testing space to recognize and eliminate issues in the dataset, including certain biases that could feed into hallucinations.

Data preprocessing, normalization, anomaly detection, and other big data preparation work should be completed from the outset and in some form each time you update training data or retrain your model. For retraining in particular, going through data preparation again ensures that the model has not learned or retained any behavior that will feed back into the training data and lead to deeper problems in the future.
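
As a rough illustration of the preparation steps described above, the following Python sketch normalizes, deduplicates, and filters a list of text records. The function name `prepare_records`, the `max_z` threshold, and the use of record length as an anomaly signal are all assumptions made for this example; a real pipeline would use checks suited to its own data.

```python
from statistics import mean, pstdev


def prepare_records(records, max_z=3.0):
    """Deduplicate text records and drop length outliers.

    `records` is a list of strings; `max_z` is a hypothetical threshold
    on the z-score of record length, used here as a crude anomaly signal.
    """
    # Normalize: collapse whitespace and lowercase so near-identical
    # records compare equal.
    normalized = [" ".join(r.split()).lower() for r in records]

    # Deduplicate while preserving first-seen order; drop empty records.
    seen = set()
    unique = []
    for text in normalized:
        if text and text not in seen:
            seen.add(text)
            unique.append(text)

    if len(unique) < 2:
        return unique

    # Anomaly detection: drop records whose length is far from the mean.
    lengths = [len(t) for t in unique]
    mu, sigma = mean(lengths), pstdev(lengths)
    if sigma == 0:
        return unique
    return [t for t, n in zip(unique, lengths) if abs(n - mu) / sigma <= max_z]
```

Running the same function each time the training data is updated is one simple way to keep the preparation step repeatable.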

2) Design Models With Interpretability and Explainability

The larger AI models that so many enterprises are moving toward have massive capabilities but can also become so dense with information and training that even their developers struggle to interpret and explain what these models are doing.

Issues with interpretability and explainability become most apparent when models begin to produce hallucinatory or inaccurate information. In this case, model developers aren’t always sure what’s causing the problem or how they can fix it, which can lead to frustration within the company and among end users.

To remove some of this doubt and confusion from the beginning, plan to design AI models with interpretability and explainability, incorporating features that focus on these two priorities into your blueprint design. While building your own models, document your processes, maintain transparency among key stakeholders, and select an architecture format that is easy to interpret and explain, no matter how data and user expectations grow.

One type of architecture that works well for interpretability, explainability, and overall accuracy is an ensemble model; this type of AI/ML approach pulls predicted outcomes from multiple models and aggregates them for more accurate, well-rounded, and transparent outputs.
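
A minimal sketch of the aggregation step in an ensemble, assuming each model returns a single label: majority voting is only one of several possible aggregation strategies, and the model names in the usage note are hypothetical.

```python
from collections import Counter


def ensemble_vote(predictions):
    """Aggregate labels from several models by majority vote.

    `predictions` maps a (hypothetical) model name to its predicted label.
    Returns the winning label and the share of models that agreed, which
    can serve as a rough, inspectable agreement signal.
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    counts = Counter(predictions.values())
    # On a tie, the label encountered first wins.
    label, votes = counts.most_common(1)[0]
    return label, votes / len(predictions)
```

For example, `ensemble_vote({"model_a": "yes", "model_b": "yes", "model_c": "no"})` returns the label `"yes"` with an agreement share of two thirds. A low agreement share can be logged as a hint that an answer deserves closer inspection.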

3) Test Models and Training Data for Performance Issues

Before you deploy an AI model and even after the fact, your team should spend significant time testing both the AI models and any training data or algorithms for performance issues that may arise in real-world scenarios.

Comprehensive testing should cover not only more common queries and input formats but also edge cases and complex queries. Testing your AI on how it responds to a wide range of possible inputs predicts how the model will perform for different use cases. It also gives your team the chance to improve data and model architecture before end users become frustrated with inaccurate or hallucinatory results.

If the AI model you’re working with can accept data in different formats, be sure to test it both with alphanumeric and audio or visual data inputs. Also, consider completing adversarial testing to intentionally try to mislead the model and determine if it falls for the bait. Many of these tests can be automated with the right tools in place.
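
One way such automated tests might look is sketched below. The `run_test_suite` helper, the `toy_model` stand-in, and the three test cases are all hypothetical; in practice `model` would wrap a call to the system under test, and the checks would be far more extensive.

```python
def run_test_suite(model, cases):
    """Run `model` (any callable str -> str) against labeled test cases.

    Each case is (prompt, check), where `check` is a function that returns
    True when the model's answer is acceptable. Returns the failing cases.
    """
    failures = []
    for prompt, check in cases:
        try:
            answer = model(prompt)
        except Exception as exc:  # a crash on an edge case is also a failure
            failures.append((prompt, f"error: {exc}"))
            continue
        if not check(answer):
            failures.append((prompt, answer))
    return failures


# Hypothetical stand-in for a real model, used only to make the sketch runnable.
def toy_model(prompt):
    known = {"capital of france?": "Paris"}
    return known.get(prompt.strip().lower(), "I don't know")


cases = [
    # Common query: the answer should contain the known fact.
    ("Capital of France?", lambda a: "Paris" in a),
    # Edge case: empty input should not produce a confident claim.
    ("", lambda a: "don't know" in a),
    # Adversarial case: a false premise the model should not accept.
    ("Why is Lyon the capital of France?", lambda a: "Lyon" not in a),
]

failures = run_test_suite(toy_model, cases)
```

An empty `failures` list means every case passed; any entries show the prompt alongside the answer (or error) that failed its check.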

4) Incorporate Human Quality Assurance Management

Several data quality, AI management, and model monitoring tools can assist your organization in maintaining high-quality AI models that deliver the best possible outputs. However, these tools aren’t always the best for detecting more obscure or subtle AI hallucinations; in these cases and many others, it’s a good idea to include a team of humans who can assist with AI quality assurance management.

Using a human-in-the-loop review format can help to catch oddities that machines may miss and also give your AI developers real-world recommendations for how improvements should be made. The individuals who handle this type of work should have a healthy balance of AI/technology skills and experience, customer service experience, and perhaps even compliance experience. This blended background will give them the knowledge they need to identify issues and create better outcomes for your end users.
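
A human-in-the-loop workflow can be sketched as a simple routing rule, assuming the system can attach some confidence score to each output. The `ReviewQueue` class and its `threshold` value are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewQueue:
    """Route low-confidence outputs to human reviewers.

    `threshold` is a hypothetical cutoff; a real system would tune it
    and would need a confidence score its model can actually provide.
    """
    threshold: float = 0.8
    pending: list = field(default_factory=list)

    def route(self, prompt, output, confidence):
        # Release confident outputs; hold everything else for a person.
        if confidence >= self.threshold:
            return "released"
        self.pending.append({"prompt": prompt, "output": output})
        return "needs_review"
```

Reviewers would then work through `pending`, and their corrections can be passed back to the development team as concrete examples.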

5) Collect User Feedback Regularly

Especially once an AI model is already in operation, the users themselves are your best source of information when it comes to AI hallucinations and other performance aberrations. If appropriate feedback channels are put in place, users can inform model developers and AI vendors of real scenarios where the model’s outputs went amiss.

With this specific knowledge, developers can identify both one-off outcomes and trending errors, and, from there, they can use this knowledge to improve the model’s training data and responses to similar queries in future iterations of the platform.
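
Separating one-off outcomes from trending errors can be as simple as counting reports per topic. The sketch below assumes feedback arrives as (topic, comment) pairs; the `trending_issues` name and the `min_count` cutoff are illustrative choices, not an established method.

```python
from collections import Counter


def trending_issues(reports, min_count=2):
    """Split user reports into recurring and one-off issues.

    `reports` is a list of (topic, comment) pairs; the topic labels and
    the `min_count` cutoff are illustrative assumptions.
    """
    counts = Counter(topic for topic, _ in reports)
    trending = {t: n for t, n in counts.items() if n >= min_count}
    one_off = [t for t, n in counts.items() if n < min_count]
    return trending, one_off
```

Topics that land in `trending` are natural candidates for targeted fixes to training data, while one-off reports may only need to be logged and watched.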

6) Partner With Ethical and Transparent Vendors

Whether you’re an AI developer or an enterprise that uses AI technology, it’s important to partner with other ethical vendors that emphasize transparent and compliant data collection, model training, model design, and model deployment practices.

This will ensure you know how the models you use are trained and what safeguards are in place to protect user data and prevent hallucinatory outcomes. Ideally, you’ll want to work with vendors that can clearly articulate the work they’re doing to achieve ethical outcomes and produce products that balance accuracy with scalability.

To gain a deeper understanding of AI ethical issues, read our guide: Generative AI Ethics: Concerns and Solutions

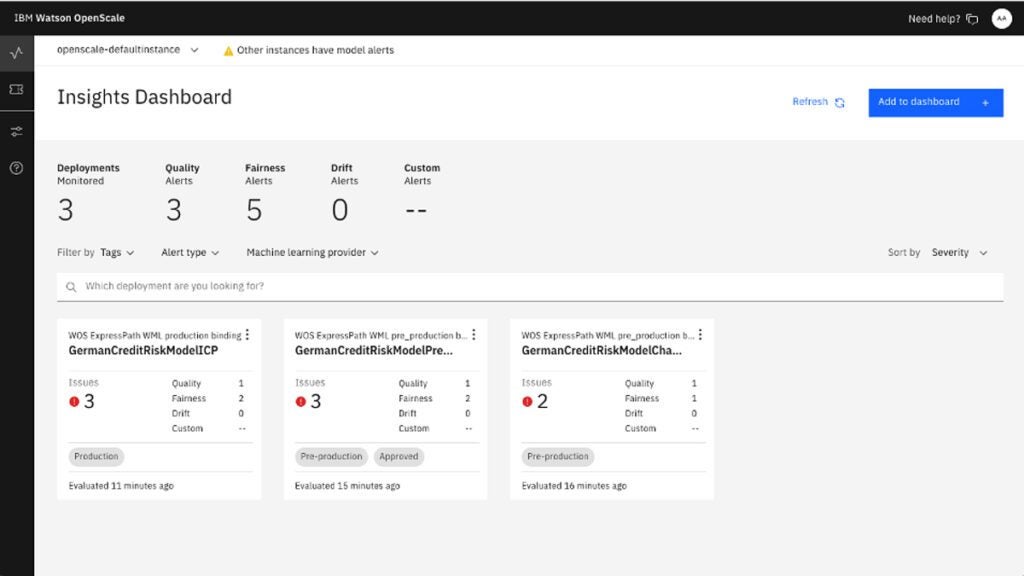

7) Monitor and Update Your Models Over Time

AI models work best when they are continuously updated and improved. These improvements should be based on user feedback, your team’s research, trends in the greater industry, and any performance data your quality management and monitoring tools collect. Regularly monitoring AI model performance from all these angles, and committing to improve models based on these analytics, can help you avoid repeating previous hallucination scenarios and head off other performance problems in the future.
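
As a minimal sketch of ongoing monitoring, the class below tracks the share of outputs flagged as hallucinations over a sliding window. How an output gets flagged (by reviewers, users, or automated checks) is left open, and the `window` and `alert_rate` defaults are placeholders rather than recommended values.

```python
from collections import deque


class HallucinationMonitor:
    """Track the share of flagged outputs over a sliding window."""

    def __init__(self, window=100, alert_rate=0.05):
        # Both defaults are placeholders, not recommended values.
        self.results = deque(maxlen=window)
        self.alert_rate = alert_rate

    def record(self, flagged):
        """Record one output; `flagged` is True if it was judged a hallucination."""
        self.results.append(bool(flagged))

    def rate(self):
        """Return the flagged share within the current window."""
        return sum(self.results) / len(self.results) if self.results else 0.0

    def should_alert(self):
        """Return True when the flagged share exceeds the alert threshold."""
        return self.rate() > self.alert_rate
```

Because the window only keeps the most recent results, the rate reflects current behavior, which makes it easier to see whether an update improved or degraded the model.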

How and Why Do AI Hallucinations Occur?

It’s not always clear how and why AI hallucinations occur, which is part of why they have become such a problem. Users aren’t always able to identify hallucinations when they happen, and AI developers often can’t determine what anomaly, training issue, or other factor may have led to such an outcome.

Modern neural networks and larger AI models rely on highly complex algorithms that are loosely modeled on the human brain. This gives them the ability to handle more complex and multifaceted user requests, but it also gives them a level of independence and seeming autonomy that makes it more difficult to understand how they arrive at certain decisions.

While it does not appear that AI hallucinations can be eliminated at this time, especially in more intricate AI models, these are a few of the most common issues that contribute to AI hallucinations:

- Incomplete training data.

- Biased training data.

- Overfitting and lack of context.

- Unclear or inappropriately sized model parameters.

- Unclear prompts.

Issues That May Arise From AI Hallucinations

AI hallucinations can lead to a number of different problems for your organization, its data, and its customers. These are just a handful of the issues that may arise based on hallucinatory outputs:

- Inaccurate decision-making and diagnostics: AI instances may confidently make an inaccurate statement of fact that leads healthcare workers, insurance providers, and other professionals to make inaccurate decisions or diagnoses that negatively impact other people and/or their reputations. For example, based on a query it receives about a patient’s blood glucose levels, an AI model may diagnose a patient with diabetes when their blood work does not indicate this health problem exists.

- Discriminatory, offensive, harmful, or otherwise outlandish outputs: Whether it’s the result of biased training data or the rationale is completely obscure, an AI model may suddenly begin to generate harmfully stereotypical, rude, or even threatening outputs. While these kinds of outlandish outputs are typically easy to detect, they can lead to a range of issues, including offending the end user.

- Unreliable data for analytics and other business decisions: AI models aren’t always perfect with numbers, but instead of stating when they are unable to come to the correct answer, some AI models have hallucinated and produced inaccurate data results. If business users are not careful, they may unknowingly rely on this inaccurate business analytics data when making important decisions.

- Ethical and legal concerns: AI hallucinations may expose private data or other sensitive information that can lead to cybersecurity and legal issues. Additionally, offensive or discriminatory statements may lead to ethical dilemmas for the organization that hosts this AI platform.

- Misinformation related to global news and current events: When users rely on AI platforms to fact-check, especially for real-time news and ongoing current events, the model may confidently produce misinformation, depending on how the question is phrased and how recent and comprehensive the model’s training is. Users may then spread that misinformation without realizing it is inaccurate.

- Poor user experience: If an AI model regularly produces offensive, incomplete, inaccurate, or otherwise confusing content, users will likely become frustrated and choose to stop using the model and/or switch to a competitor. This can alienate your core audience and limit opportunities for building a larger audience of users.

Bottom Line: Preventing AI Hallucinations When Using Large-Scale AI Models

The biggest AI innovators recognize that AI hallucinations create real problems and are taking major steps to counteract hallucinations and misinformation, but AI models continue to produce hallucinatory content on occasion.

Whether you’re an AI developer or an enterprise user, it’s important to recognize that these hallucinations are happening. Fortunately, there are steps you can take to better identify hallucinations and correct for the negative outcomes that accompany them. Doing so requires the right combination of comprehensive training and testing, monitoring and quality management tools, well-trained internal teams, and a process that emphasizes continual feedback loops and improvement. With this strategy in place, your team can better address and mitigate AI hallucinations before they lead to cybersecurity, compliance, and reputation issues for the organization.

For more information about governing your AI deployment, read our guide: AI Policy and Governance: What You Need to Know