Prompt engineering is the art and process of instructing a generative AI software program to produce the output you want, accurately and efficiently.

This task is far from easy because generative AI programs can produce an enormous range of output. The large language models behind these programs are trained on vast and ever-growing datasets, which widens the range of possible responses to any given prompt even further.

Consequently, effective prompt engineering requires a general understanding of how prompts shape a model's output, knowledge of the key tips for a well-crafted prompt, and awareness of the key use cases for an effective AI prompt.

Understanding Prompt Engineering

Generative artificial intelligence has become ubiquitous, with a wide array of generative apps and tools for both professional and recreational use. This is largely due to the success and popularity of ChatGPT, Google Bard and other AI chatbots built on large language models.

Users have realized that getting the best answer from a generative AI app can be challenging. One common mistake: users enter casually worded prompts as if the generative AI program were a quick Google search. This rarely produces optimal results.

Adding to the challenge, today's generative AI programs produce many types of output, from media to code:

- Image generators, like OpenAI's DALL-E and Midjourney, which are used to create AI art and other visual assets.

- Code generators, like GitHub Copilot, which generate code snippets and assist developers with coding tasks.

- Speech synthesis models, like DeepMind's WaveNet, which generate realistic, human-like speech.

In sum, there's a growing realization about generative AI tools: your output is only as good as your prompt. Your prompt must include elements based not only on your core concept but also on the type of media (photo, video) you are using to build your content.

A generative AI program is powerful, but it cannot guess your intent – you need to tell it exactly what you want.

How to Engineer a Well-Crafted Prompt: 7 Tips

Engineering a good prompt draws on skills and techniques that help you understand the capabilities and limitations of a model, and then tailor your prompt to optimize the quality and relevance of the generated output.

A carefully crafted prompt should follow several core principles:

1) Provide Context

This is the big one. Using proper context in your prompt means including all relevant background information, from crucial details to any necessary instructions. Are you creating a prompt in the context of healthcare, finance or software coding? Include that context. Similarly, is your prompt designed to produce historical material or a forward-looking response? Include all important time-sensitive context in your prompt's wording.

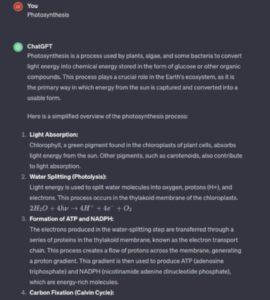

For example, you may want to generate information about the scientific process of photosynthesis. If you’re used to Google, you might simply type the prompt “photosynthesis.”

With a vague, one-word prompt, you would still get some results, though they tend to be broad and general.

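However, for a fuller, more detailed sense of the biological underpinnings of photosynthesis, use a prompt with fuller and deeper context, such as: "Explain the light-dependent and light-independent reactions of photosynthesis to a first-year biology student, including the role of chlorophyll, in about 300 words."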
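To make the difference concrete, here is a minimal Python sketch that assembles a context-rich prompt from separate pieces instead of a single keyword. The helper `build_prompt` and its fields (domain, audience, time frame) are hypothetical illustrations, not part of any library; the result is plain text you could paste into any chatbot.

```python
from typing import Optional


def build_prompt(task: str, domain: str, audience: str,
                 time_frame: Optional[str] = None) -> str:
    """Assemble a prompt that states its context explicitly (hypothetical helper)."""
    lines = [
        f"Context: this request is about {domain}.",
        f"Audience: {audience}.",
    ]
    if time_frame:
        # Time-sensitive context, e.g. historical vs. forward-looking material.
        lines.append(f"Time frame: {time_frame}.")
    # The actual request goes last, after the background it depends on.
    lines.append(f"Task: {task}")
    return "\n".join(lines)


vague_prompt = "photosynthesis"
contextual_prompt = build_prompt(
    task="Explain the light-dependent and light-independent reactions of photosynthesis.",
    domain="plant biology",
    audience="first-year university biology students",
)
print(contextual_prompt)
```

Keeping context, audience and task as separate fields makes it easy to vary one element at a time when you refine a prompt.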

2) Be Specific

Clearly define the task or question you want the AI model to address. Be highly specific about the information or output you expect, and add more detail than you think you might need. For instance, specify whether you want data or calculations in your output, and include the project-related details that will help the LLM find the needle in the haystack you're searching for.

3) Add All Constraints

If there are any limitations or constraints that the AI model needs to consider, such as word count, time frame or specific criteria, make them explicit in the prompt. Generative AI models are trained on an ocean of data; without tight constraints built into your prompt, you're likely to get more output than you actually want.
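Constraints work best when they are explicit and checkable. The following Python sketch appends a constraints block to a specific task and includes a simple check you could run on the model's reply. Both helpers, `add_constraints` and `within_word_limit`, are hypothetical illustrations, and counting whitespace-separated words is only a rough approximation of word count.

```python
from typing import List


def add_constraints(task: str, max_words: int, criteria: List[str]) -> str:
    """Append explicit, checkable constraints to a task (hypothetical helper)."""
    rules = [f"- Keep the answer under {max_words} words."]
    rules += [f"- {c}" for c in criteria]
    return task + "\n\nConstraints:\n" + "\n".join(rules)


def within_word_limit(response: str, max_words: int) -> bool:
    """Roughly check a model's response against the word-count constraint."""
    return len(response.split()) <= max_words


prompt = add_constraints(
    "Summarize this quarterly sales report for the regional sales team.",
    max_words=150,
    criteria=["Cover only the current quarter.", "List key figures as bullet points."],
)
print(prompt)
print(within_word_limit("Revenue rose in every region this quarter.", 150))
```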

4) Use System-Related Instructions

Many chat-based generative AI models accept a "system" instruction (often called a system prompt or system message) that assigns the model a role or persona. Use these instructions to guide the model's behavior or style of output.

For instance, if you’re really looking for a “dad” joke, then go ahead and spell that out clearly in your prompt: “tell me a joke in the style of a dad joke.” After using this system-related instruction, you can easily produce dozens of dad jokes.
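In chat APIs, a system instruction usually travels as its own message ahead of the user's prompt. The Python sketch below only builds that message list; it assumes the role/content layout used by OpenAI's Chat Completions API and similar chat APIs, and it does not call any service. `make_messages` is a hypothetical helper.

```python
from typing import Dict, List


def make_messages(system_instruction: str, user_prompt: str) -> List[Dict[str, str]]:
    """Pair a system instruction with a user prompt (hypothetical helper)."""
    return [
        # The system message sets the persona or style for the whole conversation.
        {"role": "system", "content": system_instruction},
        # The user message carries the actual request.
        {"role": "user", "content": user_prompt},
    ]


messages = make_messages(
    "You are a cheerful assistant who answers only with dad jokes.",
    "Tell me a joke about coffee.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Because the persona lives in the system message, you can send many different user prompts and keep the same dad-joke style throughout.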

5) Experiment with Temperature, Top-K and Top-P

Adjusting parameters such as temperature, top-k and top-p can control the randomness or creativity of the model's output. These settings are usually exposed in a model's API or playground rather than written into the prompt itself, so experiment with them alongside your prompt to fine-tune the generated output to your preferences.

Temperature Value

The temperature value controls the randomness or creativity of the generated responses. A higher temperature value, such as 0.8 or 1.0, makes the output more random and unpredictable, while a lower value, such as 0.2 or 0.5, makes the output more focused and deterministic. You'll likely want a lower temperature for a formal business project and a higher one for a recreational project.
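Under the hood, temperature is commonly applied by dividing the model's raw next-token scores (logits) by the temperature before a softmax turns them into probabilities. The Python sketch below uses made-up scores for three candidate tokens; real models do this over their whole vocabulary, and how a particular API maps its temperature setting onto this formula is an assumption here.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw next-token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        # Many APIs treat 0 as greedy decoding; this sketch simply rejects it.
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (0.2, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At the low temperature nearly all of the probability lands on the top-scoring token, which is why low settings read as focused and predictable.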

Temperature values and what they mean:

| Temperature value | Result |
|---|---|
| 0.0 to 0.3 | Formal and non-creative |
| 0.3 to 0.7 | Balanced tone |
| 0.7 to 1.0 | Creative and semi-random tone |

Top K Value

The top-k value sets how many of the most likely next tokens the model considers at each step of text generation; the next token is then sampled from only those k candidates. Put simply, a higher top-k value allows for more diverse and creative output because more alternative tokens are considered. Conversely, a lower top-k value results in more conservative and predictable responses.
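Here is a minimal Python sketch of top-k filtering followed by sampling. The next-token probabilities are hypothetical, and the helpers `top_k_filter` and `sample` are illustrations rather than any model's actual implementation; real systems apply the same idea over the full vocabulary.

```python
import random
from typing import Dict


def top_k_filter(probs: Dict[str, float], k: int) -> Dict[str, float]:
    """Keep only the k most likely tokens and renormalize their probabilities."""
    if k < 1:
        raise ValueError("k must be at least 1")
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in ranked)
    return {token: p / total for token, p in ranked}


def sample(probs: Dict[str, float], rng: random.Random) -> str:
    """Draw one token according to its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical probabilities for the word after "Tomorrow will be ..."
next_token_probs = {"sunny": 0.5, "cloudy": 0.3, "rainy": 0.15, "purple": 0.05}
print(top_k_filter(next_token_probs, 2))
print(sample(top_k_filter(next_token_probs, 2), random.Random(0)))
```

With k set to 2, an unlikely token such as "purple" can never be chosen; raising k lets more of those long-tail options back in.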

Top K values and what they mean:

| Top-k range | Result |
|---|---|
| 1 to 5 | More focused and concise responses. |
| 5 to 10 | Balanced responses with a mix of common and nuanced information. Improved coherence. |
| 20+ | More diverse and detailed responses. It may include more creative elements but could be less focused. |

Top P Value

Top-p (also called nucleus sampling) is an alternative method to control the diversity of generated responses during text generation.

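Instead of considering a fixed number of most likely tokens, as top-k does, top-p sampling considers the most likely tokens in order of probability until their cumulative probability reaches a set threshold, p. The threshold itself stays fixed, but the number of tokens that falls within it varies with each generation step: a confident prediction may need only one or two tokens, while an uncertain one may need many.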

For example, if the top-p value is set to 0.8, the model samples from the smallest set of most likely tokens whose cumulative probability reaches 80%.
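The following Python sketch applies a fixed p of 0.8 to two hypothetical next-token distributions, one confident and one spread out. The helper `top_p_filter` is an illustration, not a specific library's implementation, but it shows how the same threshold keeps a different number of tokens depending on the distribution.

```python
from typing import Dict


def top_p_filter(probs: Dict[str, float], p: float) -> Dict[str, float]:
    """Keep the smallest set of most likely tokens whose cumulative probability reaches p."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept = []
    cumulative = 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= p:
            break  # threshold reached; ignore the remaining, less likely tokens
    total = sum(prob for _, prob in kept)
    return {token: prob / total for token, prob in kept}


confident = {"sunny": 0.85, "cloudy": 0.1, "rainy": 0.05}
uncertain = {"sunny": 0.3, "cloudy": 0.3, "rainy": 0.25, "windy": 0.15}
print(len(top_p_filter(confident, 0.8)))  # → 1
print(len(top_p_filter(uncertain, 0.8)))  # → 3
```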

Top P values and what they mean:

| Top-p range | Result |
|---|---|
| 0.1 to 0.3 | More focused responses with a narrow set of possibilities. Higher probability for common and safe responses. |
| 0.3 to 0.6 | Balanced responses with a mix of common and diverse information. Offers more flexibility in generating creative and nuanced content. |
| 0.7 to 1.0 | Greater diversity in responses. It may include more unexpected or creative elements but be less focused or coherent. |

6) Test and Iterate Your Prompt

Expect to try several versions of a prompt before you get the output you want. This iterative approach is not only productive; it's one of the most effective strategies for improving your prompt engineering.

So don't hesitate to experiment repeatedly with different prompts, then evaluate the generated output to refine and improve your prompt engineering. As prompt experts have learned, you can't become a top-level prompt engineer without this extensive iteration.

Important point: even if you think you're getting the output you need, don't hesitate to dig deeper and try again. Further attempts cost little and often surface a better result.
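The test-and-iterate loop can be sketched in a few lines of Python: run several prompt variants, score each output against a criterion you care about, and keep the best. `fake_generate` is a hypothetical stand-in with canned responses so the example runs offline; in practice you would call your model's API there. The keyword-counting score is only a toy evaluation rule.

```python
from typing import Callable, Dict, List


def pick_best_prompt(variants: List[str],
                     generate: Callable[[str], str],
                     score: Callable[[str], float]) -> Dict[str, object]:
    """Run each prompt variant, score its output and return the best result."""
    results = []
    for prompt in variants:
        output = generate(prompt)
        results.append({"prompt": prompt, "output": output, "score": score(output)})
    return max(results, key=lambda r: r["score"])


# Hypothetical canned responses so the example runs without a real model.
CANNED = {
    "photosynthesis": "Plants make food from light.",
    "Explain how chlorophyll and sunlight produce glucose in photosynthesis.":
        "Chlorophyll absorbs sunlight, which drives reactions that produce glucose.",
}


def fake_generate(prompt: str) -> str:
    # Stand-in for a real model call; replace with your own API client.
    return CANNED[prompt]


def keyword_score(output: str) -> float:
    # Toy evaluation rule: one point per required topic mentioned.
    text = output.lower()
    return float(sum(word in text for word in ("chlorophyll", "glucose")))


best = pick_best_prompt(list(CANNED), fake_generate, keyword_score)
print(best["prompt"])
```

Writing the scoring rule down, even a crude one, makes each round of iteration comparable to the last.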

7) Understand Model Limitations

This is a hard fact for many prompt engineers to accept: a generative AI tool may be built on an excellent large language model, but that model cannot answer every question. For instance, a large language model built to serve the healthcare market may not be able to handle the advanced finance questions an MBA might type into it.

So it's essential to engineer your prompts within the limitations and capabilities of the specific AI model you are using. This will help you design prompts that play to the model's strengths and avoid the pitfalls of its weaknesses.

Use Cases for Prompt Engineering

If you're wondering about the possible use cases for prompt engineering, realize that the list is far from fixed: the number of potential use cases grows constantly.

As the table below shows, they currently range from creating blog posts to summarizing full-length content. As generative AI tools mature and the field of prompt engineering advances, use cases are expanding across finance, manufacturing, healthcare and many more fields.

A sampling of current use cases for prompt engineering:

| Application/Use case | Description |
|---|---|
| Content generation | Create blog posts, articles and creative writing |
| Code generation | Generate code and assist with debugging |
| Language translation | Prompt the model to translate text between languages |
| Conversational agents | Develop conversational agents like chatbots |
| Idea generation | Generate ideas for various projects, businesses or creative endeavors |
| Content summarization | Summarize lengthy documents, articles or reports |
| Simulation and scenario exploration | What-if analysis: explore hypothetical scenarios and their potential outcomes |
| Entertainment | Generate jokes, puns or humorous content |
| Persona creation | Generate personas for storytelling, gaming or role-playing scenarios |
| General question answering | Pose questions to the model to gather information on a wide range of topics |

5 Top Prompt Engineering Tools and Software

- Azure Prompt Flow: A Microsoft Azure tool for building LLM-based applications, and currently one of the most feature-rich prompt engineering tools. It enables you to create executable flows that connect LLMs, prompts and Python tools through a visualized graph.

- Agenta: An open-source tool designed for building LLM applications. It offers resources for experimentation, prompt engineering, evaluation and monitoring, allowing you to develop and deploy LLM apps.

- Helicone.ai: A Y Combinator-backed open-source platform for AI observability. It provides monitoring, logging and tracing for your LLM applications, which can help you see how your prompts perform and refine them.

- LLMStudio: Developed by TensorOps, LLMStudio is an open-source tool designed to streamline your interactions with language models from providers such as OpenAI, Google Vertex AI and Amazon Bedrock, including Google's PaLM 2.

- LangChain: A framework for developing applications powered by language models. Its components include the LangChain libraries, LangChain templates, LangServe and LangSmith, a platform designed to simplify debugging, testing, evaluating and monitoring large language model applications.

Bottom Line: Effective Prompt Engineering

Prompt engineering is both an art and a science: it merges the precision of science with the craft of skilled writing, and it demands reasoning as well as creativity. When mastered and used effectively, it becomes a powerful tool for harnessing the capabilities of generative AI software.

Of course, prompt engineering as a field is brand new: the term was rarely heard outside research circles until late 2022. So be aware that the strategies and best practices for prompt engineering will change rapidly in the months and years ahead. Also realize that prompt engineering is a valuable skill in the AI market; if you're a top prompt engineer, there will likely be an open job for you.

To gain a fuller understanding of generative AI, read our guide: What is Generative AI?